MSC: A Marine Wildlife Video Dataset with Grounded Segmentation and Clip-Level Captioning

Quang-Trung Truong1, Wong Yuk Kwan1, Vo Hoang Kim Tuyen Dang2, Rinaldi Gotama3, Duc Thanh Nguyen4, Sai-Kit Yeung1

1HKUST, 2HCMUS, 3Indo Ocean Project, 4Deakin University

ACM International Conference on Multimedia (ACMMM Datasets) 2025

Abstract

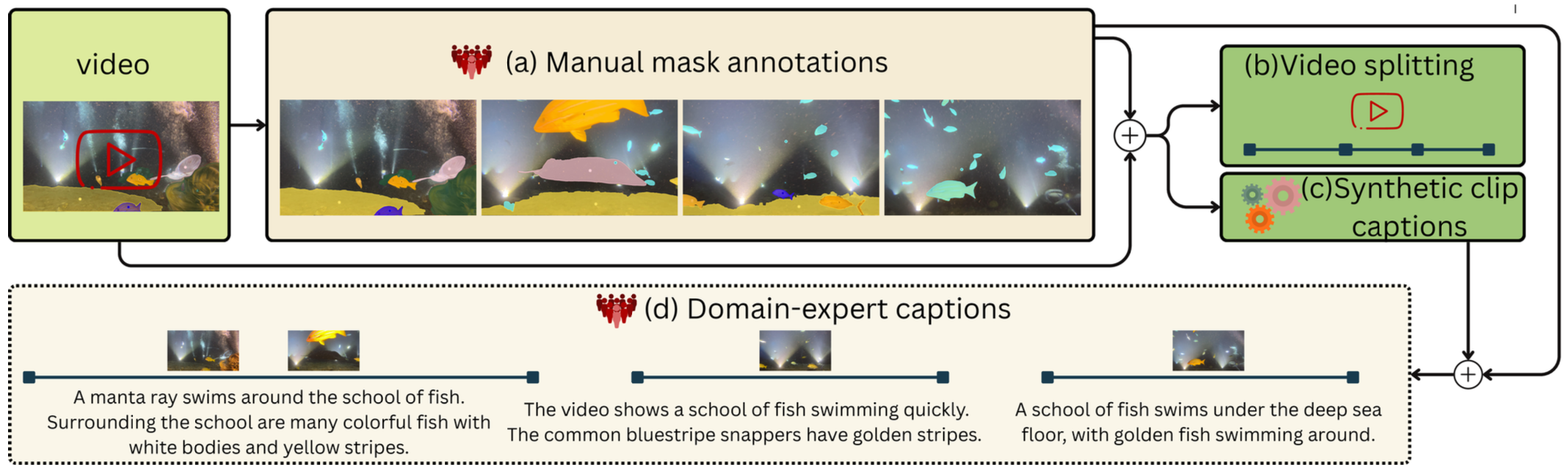

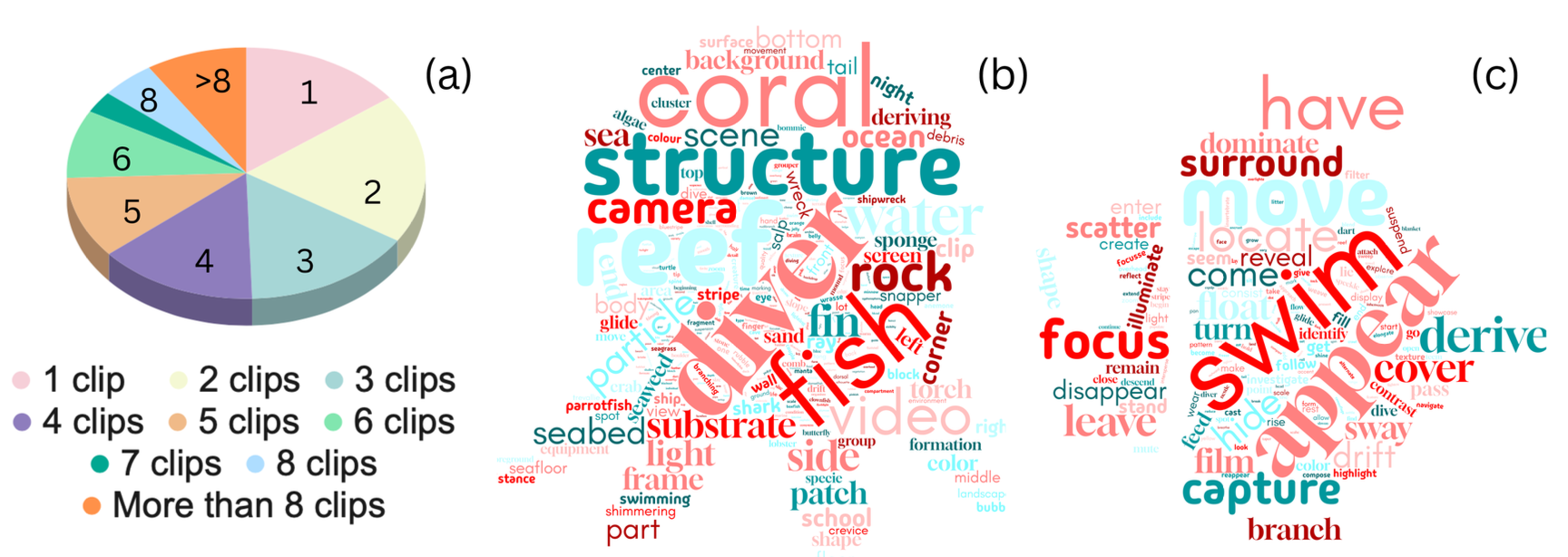

Marine videos pose significant challenges for video understanding due to the dynamics of marine objects and their surrounding environment, camera motion, and the complexity of underwater scenes. Existing video captioning datasets, which typically focus on generic or human-centric domains, often fail to generalize to the marine environment or to provide insights into marine life. To address these limitations, we propose a two-stage, marine object-oriented video captioning pipeline. We introduce a comprehensive video understanding benchmark that leverages triplets of video, text, and segmentation masks to facilitate visual grounding and captioning, leading to improved marine video understanding, analysis, and generation. Additionally, we highlight the effectiveness of video splitting for detecting salient object transitions at scene changes, which significantly enriches the semantic content of the captions.
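The abstract does not specify how videos are split into clips. As a hypothetical illustration of the general idea (cutting a video where the scene changes, so that each clip can be captioned separately), the sketch below uses a simple histogram-difference shot detector. This is a generic, swapped-in technique, not the MSC pipeline: the function names, the 32-bin grayscale histogram, and the 0.5 threshold are illustrative assumptions.

```python
import numpy as np


def frame_histogram(frame: np.ndarray, bins: int = 32) -> np.ndarray:
    """Normalized grayscale intensity histogram of one frame.

    Assumes pixel values in [0, 255], shaped H x W or H x W x C.
    """
    gray = frame.mean(axis=2) if frame.ndim == 3 else frame
    hist, _ = np.histogram(gray, bins=bins, range=(0, 256))
    return hist / max(hist.sum(), 1)


def split_into_clips(frames, threshold: float = 0.5, bins: int = 32):
    """Hypothetical scene-change splitter (not the authors' method).

    Cuts between consecutive frames whose histograms differ by more than
    `threshold`, measured as total variation distance (range [0, 1]).
    Returns (start, end) frame-index pairs with `end` exclusive.
    """
    if len(frames) == 0:
        return []
    boundaries = [0]
    prev = frame_histogram(frames[0], bins)
    for i in range(1, len(frames)):
        cur = frame_histogram(frames[i], bins)
        # Total variation distance between the two normalized histograms.
        distance = 0.5 * np.abs(cur - prev).sum()
        if distance > threshold:
            boundaries.append(i)  # frame i starts a new clip
        prev = cur
    boundaries.append(len(frames))
    return [(boundaries[k], boundaries[k + 1]) for k in range(len(boundaries) - 1)]


# Usage on a synthetic video: five dark frames followed by five bright frames.
dark = [np.full((4, 4, 3), 20, dtype=np.uint8)] * 5
bright = [np.full((4, 4, 3), 220, dtype=np.uint8)] * 5
print(split_into_clips(dark + bright))  # → [(0, 5), (5, 10)]
```

A global histogram is cheap but ignores where objects are in the frame, so in real underwater footage it can miss transitions between similarly colored scenes; a practical pipeline would likely rely on a dedicated shot detector or learned features instead.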

Dataset

We sampled 10 videos from the MSC dataset. Readers can download this subset of the dataset via this link.

Data Pipeline and Statistics

MSC Annotation Generation

Overview of the MSC Dataset

Clip-Level Captioning

Citation

@article{truong2025msc,
  title = {MSC: A Marine Wildlife Video Dataset with Grounded Segmentation and Clip-Level Captioning},
  author = {Truong, Quang-Trung and Wong, Yuk-Kwan and Dang, Vo Hoang Kim Tuyen and Gotama, Rinaldi and Nguyen, Duc Thanh and Yeung, Sai-Kit},
  journal = {arXiv preprint arXiv:2508.04549},
  year = {2025}
}